Simulation: Generation IV

Sharpening up legacy codes

21 January 2011

A team from Argonne National Laboratory in the USA is developing a modern set of exascale computing simulation tools for the design and study of liquid-metal-cooled fast reactors.

The simulation-based high-efficiency advanced reactor prototyping (SHARP) project at Argonne National Laboratory (ANL) is a multidivisional, collaborative effort to develop a modern set of design and analysis tools for liquid-metal-cooled fast reactors. The project’s modular approach allows users to attach new modules to legacy reactor analysis codes, thereby avoiding costly rewriting of codes while enabling the incorporation of new physics modules.

The four main project objectives are to:

- Enable both static and dynamic operating conditions to be derived from first-principles-based methodologies

- Provide the ability to plug in new and different combinations of physics modules to study different phenomena

- Exploit state-of-the-art numerical techniques so that the codes can easily run on new platforms

- Allow development of new physics components without expert knowledge of the entire system.
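The plug-in objectives above can be illustrated with a minimal module registry. This is a sketch under stated assumptions, not SHARP's actual interface: the names `REGISTRY`, `register` and `run`, the shared-state dictionary, and the placeholder module formulas are all hypothetical. Each module reads a shared state and returns new fields, so different combinations can be run without any module knowing about the others.

```python
from typing import Callable, Dict, List

State = Dict[str, float]
Module = Callable[[State], State]

# Hypothetical registry: module name -> function mapping shared state to new fields.
REGISTRY: Dict[str, Module] = {}

def register(name: str) -> Callable[[Module], Module]:
    """Decorator that adds a physics module to the registry under `name`."""
    def wrap(fn: Module) -> Module:
        REGISTRY[name] = fn
        return fn
    return wrap

@register("neutronics")
def placeholder_neutronics(state: State) -> State:
    return {"power": 100.0}  # placeholder value, not a physics model

@register("thermal_hydraulics")
def placeholder_thermal(state: State) -> State:
    # Placeholder: fuel temperature rises linearly with power.
    return {"fuel_temp": 300.0 + 2.0 * state["power"]}

@register("structural")
def placeholder_structural(state: State) -> State:
    # Placeholder: expansion proportional to temperature rise.
    return {"expansion": 1.0e-5 * (state["fuel_temp"] - 300.0)}

def run(selection: List[str], state: State) -> State:
    """Apply the selected modules in order, merging each result into the shared state."""
    for name in selection:
        state.update(REGISTRY[name](state))
    return state

result = run(["neutronics", "thermal_hydraulics", "structural"], {})
```

Because modules only communicate through the shared state, a developer can add or swap one module without expert knowledge of the others, which is the point of the last objective.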

The goal is to reduce uncertainty margins and to enable virtual prototyping of regimes that would be impractical from an experimental point of view.

Supporting legacy code

One challenge facing the SHARP team is that legacy codes designed more than two decades ago are still the standard for reactor analysis. Their weakness lies in their inability to handle designs beyond the scope of the original experiments. Moreover, the legacy solvers are insufficient for handling nuclear reactor dynamics; new solvers are needed that can exploit the power of today’s and tomorrow’s computer architectures to provide a highly detailed description of a reactor core.

So that reactor-design end users can keep working without interruption, the new codes developed by the SHARP team are intended to coexist with, and gradually replace, the legacy codes. Specifically, SHARP will support legacy code interfaces so that users and developers can validate a given geometry or model against current tools without changing the geometry definition, and can focus on testing and debugging a single high-fidelity module while retaining the integrated physics framework.
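One common way to support legacy interfaces is the adapter pattern: the legacy routine is wrapped so that it accepts the same geometry object as a new module, and both can be run on an unchanged geometry for comparison. The sketch below assumes this pattern; `Geometry`, `LegacyAdapter`, `HighFidelitySolver`, `legacy_routine` and their formulas are hypothetical placeholders, not SHARP code or reactor physics.

```python
from dataclasses import dataclass
from typing import Callable, Protocol

@dataclass(frozen=True)
class Geometry:
    """Hypothetical geometry definition shared, unchanged, by every solver."""
    pitch_cm: float
    n_pins: int

class Solver(Protocol):
    def k_eff(self, geom: Geometry) -> float: ...

class LegacyAdapter:
    """Exposes a legacy routine through the common Solver interface."""
    def __init__(self, legacy_routine: Callable[[float, int], float]):
        self._routine = legacy_routine

    def k_eff(self, geom: Geometry) -> float:
        # Translate the shared geometry into the legacy code's own argument list.
        return self._routine(geom.pitch_cm, geom.n_pins)

class HighFidelitySolver:
    """Placeholder for a new module; the formula is illustrative, not physics."""
    def k_eff(self, geom: Geometry) -> float:
        return 1.0 + 1.0e-5 * geom.n_pins / geom.pitch_cm

def legacy_routine(pitch: float, pins: int) -> float:
    """Stand-in for a legacy code entry point (illustrative formula)."""
    return 1.0 + 0.9e-5 * pins / pitch

def compare(reference: Solver, candidate: Solver, geom: Geometry) -> float:
    """Difference in k_eff when the same geometry is run through both solvers."""
    return candidate.k_eff(geom) - reference.k_eff(geom)

geom = Geometry(pitch_cm=1.0, n_pins=217)
delta = compare(LegacyAdapter(legacy_routine), HighFidelitySolver(), geom)
```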

Integrating modules

The SHARP project seeks to provide an integrated system of software tools that describes overall nuclear plant behaviour with high fidelity. Two components in particular, neutronics and thermal-hydraulics, have received special attention at ANL.

Neutronics

Neutronics is the study of the behaviour and effects of neutrons in the reactor. Calculations represent the neutron energy spectrum with a particular energy-group structure (for example, fast groups of <100 keV), and describing this spectrum correctly over several energy groups is critical for accurately calculating quantities such as reaction rates. Current neutronics analysis codes require two or more approximation and collapsing steps that reduce fine neutron energy-group structures to coarse ones. By contrast, the neutronics code UNIC (Ultimate Neutronic Investigation Code), developed at Argonne, seeks to eliminate this multistep procedure by exploiting advances in numerical and algorithmic efficiency and by using the increasing power of high-performance computing systems.
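A collapsing step of the kind described above is often done by flux weighting: each coarse-group cross section is the average of its fine-group cross sections, weighted by an assumed fine-group flux, so that reaction rates are preserved for that flux. The sketch below shows this standard flux-weighted collapse; the function name `collapse` and the input arrays are illustrative, not taken from UNIC or any legacy code.

```python
import numpy as np

def collapse(sigma_fine, flux_fine, group_map):
    """Flux-weighted collapse of fine-group cross sections into coarse groups.

    group_map[g] is the coarse-group index of fine group g, and
    sigma_G = sum_{g in G} sigma_g * phi_g / sum_{g in G} phi_g.
    Returns the coarse cross sections and the coarse-group fluxes.
    """
    sigma_fine = np.asarray(sigma_fine, dtype=float)
    flux_fine = np.asarray(flux_fine, dtype=float)
    group_map = np.asarray(group_map)
    n_coarse = group_map.max() + 1
    # Sum reaction rates and fluxes over the fine groups inside each coarse group.
    reaction = np.bincount(group_map, weights=sigma_fine * flux_fine, minlength=n_coarse)
    flux = np.bincount(group_map, weights=flux_fine, minlength=n_coarse)
    return reaction / flux, flux

# Illustrative data: four fine groups collapsed into two coarse groups.
sigma_coarse, flux_coarse = collapse([1.0, 2.0, 3.0, 4.0], [1.0, 1.0, 2.0, 2.0], [0, 0, 1, 1])
```

The coarse result reproduces the reaction rate only for the weighting flux used; if the true spectrum in the reactor differs, the collapsed data carry an error, which is the approximation the multistep procedure introduces.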

A key feature of UNIC is its combination of different methods. For example, one method is based on the second-order form of the Boltzmann equation using a spherical harmonics angular approximation, while another is based on the first-order integral form of the transport equation using the method of characteristics (MOC). Combining these methods allows UNIC to use an MOC formulation locally, for example to handle fuel-pin heterogeneity in three dimensions, while using a spherical harmonics formulation for the rest of the domain.
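The core step of the method of characteristics can be shown on a single ray. Along a characteristic, the angular flux obeys dψ/ds = q − Σₜψ, so with a flat (constant) source in each region the outgoing flux of a segment of length s is ψ_out = ψ_in·e^(−Σₜs) + (q/Σₜ)(1 − e^(−Σₜs)). This is a minimal one-ray sketch under the flat-source assumption; a production code such as UNIC sweeps many rays and angles in three dimensions, and the function name and inputs here are illustrative.

```python
import math

def sweep_ray(psi_in, segments):
    """Transport the angular flux along one characteristic with a flat source per region.

    segments: list of (length, sigma_t, q) for each region the ray crosses,
    where sigma_t is the total cross section and q the (angular) source.
    Returns the outgoing flux and the segment-averaged fluxes.
    """
    psi = psi_in
    averages = []
    for length, sigma_t, q in segments:
        tau = sigma_t * length                  # optical thickness of the segment
        attenuation = math.exp(-tau)
        psi_out = psi * attenuation + (q / sigma_t) * (1.0 - attenuation)
        # Segment average from integrating dpsi/ds = q - sigma_t * psi over the segment.
        averages.append(q / sigma_t + (psi - psi_out) / tau)
        psi = psi_out
    return psi, averages

# A ray crossing two illustrative regions.
psi_out, psi_avg = sweep_ray(1.0, [(0.5, 1.2, 0.3), (1.0, 0.4, 0.0)])
```

The segment averages are what an MOC solver accumulates into region fluxes, which in turn update the sources for the next sweep.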

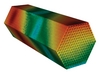

Figure 1 shows the rapid spatial variation in the high-energy neutron distribution between and within each plate along with the more slowly varying, global distribution. The figure illustrates how UNIC allows researchers to capture both of these effects simultaneously.

UNIC developers seek to solve the neutron transport equation in a detailed three-dimensional geometry with thousands of energy groups. The aim of this so-called deterministic approach is to provide the same geometrical flexibility one can achieve with Monte Carlo methods, without the approximations—or ‘homogenization’—associated with the multistep approach currently used.

To solve the large-scale linear systems that arise in the simulations, the SHARP team has created specialized implementations of preconditioners in PETSc (the Portable, Extensible Toolkit for Scientific Computation), a library developed at Argonne. A preconditioner transforms a linear system into an equivalent one that an iterative method can solve in fewer iterations. In particular, a conjugate gradient method is used because it scales to thousands of processors.
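The preconditioned conjugate gradient idea can be sketched in a few lines. The example below uses a simple Jacobi (diagonal) preconditioner purely as a stand-in; the specialized SHARP preconditioners are not described here, and the test matrix is an illustrative symmetric positive-definite system, not a transport operator. In PETSc the equivalent generic setup is selected with options such as `-ksp_type cg -pc_type jacobi`.

```python
import numpy as np

def pcg(A, b, tol=1e-10, max_iter=1000):
    """Conjugate gradient with a Jacobi (diagonal) preconditioner.

    A must be symmetric positive definite. Returns the solution and iteration count.
    """
    m_inv = 1.0 / np.diag(A)            # Jacobi preconditioner M^{-1}
    x = np.zeros_like(b, dtype=float)
    r = b - A @ x                       # initial residual
    z = m_inv * r                       # preconditioned residual
    p = z.copy()                        # initial search direction
    rz = r @ z
    for k in range(max_iter):
        Ap = A @ p
        alpha = rz / (p @ Ap)           # step length along p
        x += alpha * p
        r -= alpha * Ap
        if np.linalg.norm(r) < tol * np.linalg.norm(b):
            return x, k + 1
        z = m_inv * r
        rz_new = r @ z
        p = z + (rz_new / rz) * p       # new direction, A-conjugate to the previous ones
        rz = rz_new
    return x, max_iter

# Illustrative SPD test matrix (diagonally dominant tridiagonal).
n = 50
A = np.diag(2.5 + np.arange(n, dtype=float)) - np.eye(n, k=1) - np.eye(n, k=-1)
b = np.ones(n)
x, iterations = pcg(A, b)
```

Each iteration needs only matrix-vector products and inner products, which is why the method distributes well across many processors.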

Thermal hydraulics

In addition to performing neutronics analysis, SHARP researchers are investigating the thermal-hydraulics in next-generation fast reactors. In a reactor core, approximately 95% of the heat produced by fission is deposited directly in the nuclear fuel. This heat then conducts outward through the fuel and cladding (or outer layer of the fuel rods) to the surface, where it is removed by the coolant. To study the temperature distribution resulting from this process, which determines both the thermal efficiency and the feasibility of the reactor design, researchers have developed the Nek code, a reactor-specific version of Argonne’s state-of-the-art computational fluid dynamics code that was originally designed for the study of hydrodynamics and heat transfer.
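The conduction path described above (fuel, then cladding, then coolant) can be estimated with textbook one-dimensional thermal resistances for a cylindrical pin. This is not Nek, which resolves the full three-dimensional flow; the sketch assumes uniform volumetric heating, no fuel-cladding gap (fuel radius equal to the inner cladding radius), and constant conductivities, and all input values are hypothetical.

```python
import math

def pin_temperatures(q_lin, t_coolant, r_clad_in, r_clad_out, k_fuel, k_clad, h):
    """Steady radial temperatures in a cylindrical fuel pin.

    q_lin: linear heat rate [W/m]; radii [m]; conductivities [W/(m K)];
    h: coolant heat-transfer coefficient [W/(m^2 K)]; temperatures in K.
    """
    # Convective film: q' = 2*pi*r*h*dT
    t_clad_out = t_coolant + q_lin / (2 * math.pi * r_clad_out * h)
    # Conduction through the cladding annulus
    t_clad_in = t_clad_out + q_lin * math.log(r_clad_out / r_clad_in) / (2 * math.pi * k_clad)
    # Uniformly heated solid cylinder: centre-to-surface rise is q'/(4*pi*k)
    t_center = t_clad_in + q_lin / (4 * math.pi * k_fuel)
    return {"clad_outer": t_clad_out, "clad_inner": t_clad_in, "fuel_center": t_center}

# Hypothetical inputs, for illustration only.
temps = pin_temperatures(30e3, 700.0, 3.0e-3, 3.5e-3, 3.0, 20.0, 1.0e5)
```

With a low fuel conductivity, most of the temperature rise occurs inside the fuel, which is why the fuel centreline temperature often governs design limits.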

Nek simulates fluid flow, convective heat and species transport, and magnetohydrodynamics in two and three dimensions. The spectral element (high-order finite element) method on which Nek is based converges rapidly: for smooth solutions, the error decreases exponentially as the polynomial order increases. As a result, simulations of small-scale features transported over long times and distances incur minimal numerical dissipation and dispersion. The code has also demonstrated scalability to more than 100,000 processors.
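The rapid convergence of high-order methods can be seen in one dimension. Spectral element codes typically use Gauss-Lobatto-Legendre (GLL) nodes within each element; the sketch below interpolates a smooth function through GLL nodes of increasing order with NumPy's Legendre module and measures the maximum error. It illustrates the convergence property only and is not taken from Nek; the test function `exp` and the orders chosen are arbitrary.

```python
import numpy as np
from numpy.polynomial import legendre as L

def gll_nodes(n):
    """Gauss-Lobatto-Legendre nodes of order n: the endpoints plus the roots of P_n'."""
    interior = np.real(L.Legendre.basis(n).deriv().roots())
    return np.concatenate(([-1.0], np.sort(interior), [1.0]))

def interp_error(f, n):
    """Maximum error of the degree-n interpolant of f through the n+1 GLL nodes."""
    x = gll_nodes(n)
    coeffs = L.legfit(x, f(x), n)          # n+1 points, degree n: exact interpolation
    fine = np.linspace(-1.0, 1.0, 2001)
    return np.max(np.abs(L.legval(fine, coeffs) - f(fine)))

errors = [interp_error(np.exp, n) for n in (2, 4, 6, 8)]
```

Each increase in polynomial order cuts the error by orders of magnitude, rather than by the fixed factor a low-order method gains from mesh refinement.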

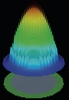

Figure 2 shows the coolant-flow pressure distribution in a 217-pin wire-wrapped subassembly. The simulation was computed with Nek5000 on 65,536 processors of the Argonne Leadership Computing Facility’s Blue Gene/P and was carried out by Paul Fischer, Aleks Obabko, Andrew Siegel, Dave Pointer and Jeff Smith of the SHARP project team. The Reynolds number (the ratio of inertial to viscous forces) for this flow, based on hydraulic diameter, is Re ≈ 10,500. The simulation was a watershed computation for Nek5000: it was the first to exceed one million elements (2.95 million) and the first to use more than one billion grid points.
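The hydraulic diameter used to define the Reynolds number is D_h = 4A/P_w, the flow area divided by the wetted perimeter, times four. A minimal sketch for one interior cell of an idealized triangular pin lattice follows; it ignores the wire wraps and duct walls, and the pitch, pin diameter and coolant properties are hypothetical values, not those of the simulated subassembly.

```python
import math

def hydraulic_diameter_triangular_cell(pitch, pin_diameter):
    """D_h = 4 A / P_w for one pin cell of an infinite triangular lattice [m].

    Wire wraps and duct walls are ignored.
    """
    flow_area = (math.sqrt(3) / 2) * pitch**2 - math.pi * pin_diameter**2 / 4
    wetted_perimeter = math.pi * pin_diameter
    return 4.0 * flow_area / wetted_perimeter

def reynolds(density, velocity, d_h, viscosity):
    """Re = rho * U * D_h / mu: ratio of inertial to viscous forces."""
    return density * velocity * d_h / viscosity

# Hypothetical geometry and sodium-like properties, for illustration only.
d_h = hydraulic_diameter_triangular_cell(pitch=9.0e-3, pin_diameter=8.0e-3)
re = reynolds(density=850.0, velocity=1.0, d_h=d_h, viscosity=2.5e-4)
```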

Exploiting parallelism

Initially, a key objective of the SHARP project has been the loose coupling of its physics modules: the thermal-hydraulics and neutronics modules just described, plus a structural mechanics module, developed at Lawrence Livermore National Laboratory, that assesses the structural integrity of the fuel assembly. Coupling lets the modules interact by exchanging data values through well-defined interfaces. The coupling framework also includes a collection of utility modules, for example a code that lets researchers visualize their data at run time.
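Loose coupling is commonly implemented as a fixed-point (Picard) iteration: each module is solved separately, and only its output fields pass through the interface to the next module until the exchanged values stop changing. The sketch below assumes this scheme with two hypothetical one-line stand-in modules; it is not SHARP's coupling framework, and the feedback coefficients are invented for illustration.

```python
def neutronics(fuel_temp):
    """Hypothetical stand-in: power drops as the fuel heats (negative feedback)."""
    return 100.0 * (1.0 - 1.0e-3 * (fuel_temp - 300.0))

def thermal_hydraulics(power):
    """Hypothetical stand-in: fuel temperature rises linearly with power."""
    return 300.0 + 1.0 * power

def couple(tol=1e-10, max_iter=100):
    """Picard iteration: each module sees only the other module's output."""
    temp = 300.0
    for it in range(1, max_iter + 1):
        power = neutronics(temp)                # interface: temperature -> power
        new_temp = thermal_hydraulics(power)    # interface: power -> temperature
        if abs(new_temp - temp) < tol:
            return power, new_temp, it
        temp = new_temp
    raise RuntimeError("coupling iteration did not converge")

power, temp, iterations = couple()
```

The design keeps each module a black box, which is what allows separately developed codes to be coupled; the price is extra iterations when the feedback between modules is strong.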

Early reactor simulations involved numerous simplifications and homogenization techniques in order to be manageable on the then-existing resources. Such techniques, however, can greatly limit the accuracy of the solution, leaving considerable uncertainty in crucial reactor design and operational parameters.

Parallel computing (in which many calculations are carried out simultaneously) is needed for the coupling framework to handle large-scale data movement among the physics modules and to preserve overall accuracy without compromising the accuracy of each physics module. Parallelization is also necessary for the space-angle-energy representations used in transport calculations and for handling the billions of degrees of freedom in explicit-geometry core calculations.
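A quick count shows why space-angle-energy problems need many processors: the number of unknowns is the product of the spatial, angular and energy resolutions. The sizes below are hypothetical and chosen only for illustration; the core count is the Blue Gene/P figure quoted in this article for the 2009 Zero Power Reactor runs, and the memory estimate covers a single double-precision solution vector only.

```python
def transport_dofs(spatial_points, angular_unknowns, energy_groups):
    """Unknowns in a discretized space-angle-energy flux: one value per combination."""
    return spatial_points * angular_unknowns * energy_groups

# Hypothetical problem size (illustrative only, not a SHARP/UNIC case).
dofs = transport_dofs(spatial_points=10**6, angular_unknowns=100, energy_groups=1000)

bytes_per_value = 8          # one double-precision number
cores = 163_840              # Blue Gene/P core count quoted for the 2009 runs
total_gb = dofs * bytes_per_value / 1e9
mb_per_core = dofs * bytes_per_value / cores / 1e6
```

Even one copy of the solution (about 800 GB here) far exceeds a single node's memory, so the unknowns must be distributed, and solvers typically hold several such vectors at once.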

For the past few years, therefore, the SHARP team has been designing and implementing parallel, highly scalable algorithms that can take advantage of today’s leadership computing facilities, notably, the IBM Blue Gene/P and the Cray XT5. With the advent of exascale computing, the SHARP team hopes to transition from assembly-level homogenization to less crude homogenization and eventually to fully heterogeneous descriptions.

Validating the codes

Over the same period, Argonne researchers have carried out highly detailed simulations of the Zero Power Reactor, on which physics benchmarks were conducted at Argonne almost 30 years ago. Because those early benchmarks were designed to test fast reactor physics data and methods, they were kept as simple as possible in geometry and composition, which makes them excellent test cases for validating today’s simulation codes.

In particular, in 2009 the Argonne team carried out highly detailed simulations of the Zero Power Reactor experiments on up to 163,840 processor cores of the IBM Blue Gene/P and 222,912 processor cores of the Cray XT5, as well as on 294,912 processor cores of a Blue Gene/P at the Juelich Supercomputing Centre in Germany. The researchers successfully represented the details of the full reactor geometry for the first time and have been able to compare the results directly with the experimental data.
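One common way to express the agreement between a calculated and a measured multiplication factor is as a reactivity difference, with reactivity ρ = (k − 1)/k usually quoted in pcm (1 pcm = 10⁻⁵). The sketch below shows this conversion; the k values in the example are hypothetical and are not the Zero Power Reactor results.

```python
def reactivity_pcm(k):
    """Reactivity rho = (k - 1) / k, expressed in pcm (1 pcm = 1e-5)."""
    return (k - 1.0) / k * 1e5

def calc_minus_exp_pcm(k_calc, k_exp):
    """Reactivity discrepancy between calculation and experiment, in pcm."""
    return reactivity_pcm(k_calc) - reactivity_pcm(k_exp)

# Hypothetical values, for illustration only.
discrepancy = calc_minus_exp_pcm(k_calc=1.001, k_exp=1.000)
```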

A video of the Zero Power Reactor simulation is available online: www.tinyurl.com/yj9bxg6.

The next stage

Next, the SHARP team will design better algorithms that reduce communication overhead, balance the computational load across processors more effectively, and use memory more economically. The intention is to progressively replace existing, highly homogenized techniques with more direct solution methods based on first principles. The long-term objective is to replace expensive mock-up experiments and to reduce the uncertainty in crucial reactor design and operational parameters.
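Load balancing can be illustrated with a generic heuristic. The sketch below uses the greedy longest-processing-time (LPT) rule: sort work items by cost and always give the next item to the currently least-loaded processor. This is a standard textbook heuristic chosen for illustration, not the SHARP team's method, and the per-item costs are hypothetical.

```python
import heapq

def balance(work, n_procs):
    """Greedy longest-processing-time (LPT) assignment of work items to processors.

    work: list of item costs. Returns per-processor item lists and loads.
    """
    heap = [(0.0, p) for p in range(n_procs)]   # (current load, processor id)
    heapq.heapify(heap)
    assignment = [[] for _ in range(n_procs)]
    loads = [0.0] * n_procs
    # Largest items first; each goes to the least-loaded processor so far.
    for item, cost in sorted(enumerate(work), key=lambda ic: -ic[1]):
        load, p = heapq.heappop(heap)
        assignment[p].append(item)
        loads[p] = load + cost
        heapq.heappush(heap, (loads[p], p))
    return assignment, loads

# Hypothetical per-item costs (for example, estimated work per mesh block).
assignment, loads = balance([5.0, 4.0, 3.0, 3.0, 3.0], n_procs=2)
```

Here LPT gives loads of 8 and 10, while the optimum is 9 and 9, a reminder that simple heuristics trade some balance for speed and that better partitioning algorithms remain worth developing.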

This work is part of a broader initiative to incorporate fundamental physics simulations as a key ingredient in the design and evaluation of next-generation nuclear facilities, reducing dependence on costly experiments and facilitating the development of verifiably safe designs.